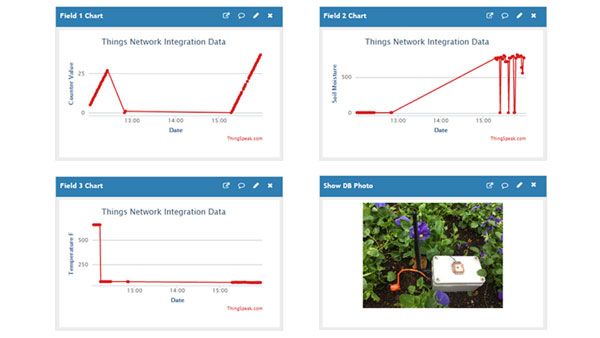

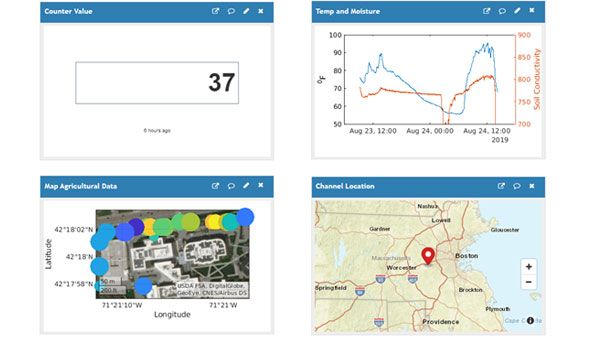

Welcome to the ThingSpeak community site. We’re sharing the IoT. Tell us about your project or ask us about ours.

Welcome to the ThingSpeak Community

Moderator: Christopher Stapels

This is the community for students, researchers, and engineers who use MATLAB, Simulink, and ThingSpeak for Internet of Things applications. Here you can find the latest ThingSpeak news, tutorials to jump-start your next IoT project, and a forum for discussing your latest cloud-based project. You can also browse answers to problems other users have solved and share how you solved your own.